Salesforce; it’s a behemoth, a massive product used by a massive percentage of companies to handle all corners of their business. With that size comes complexity, inconsistency, and woefully inadequate documentation and support.

And so I provide to you a tale of battling this titan, and how over the course of months we managed to tame the beast, beat it into submission, and make it feed data from external systems into accounts within its mighty belly.

The Quest

Salesforce is used by our Sales (you don’t say?) and Customer Success teams to manage our relationships with existing and prospective customers. One thing which Customer Success spent a lot of time doing is checking how much customers are using the product, and whether they are approaching, or even exceeding, their paid for usage levels. This was a hugely time consuming process of creating secure production database clones, running queries against these, taking the data from these queries and manually entering it into a large spreadsheet to then manually check.

Slow, error prone, only able to be done once a month or so, and so quite often would highlight customers who had already exceeded their usage limits rather than give forewarning that one was getting close and so should be contacted to discuss.

The question was asked “Could we get this data into Salesforce automatically, and daily, so that we don’t have to spend all of our time doing this manually?”

The Solutions offered

The dev team said “Well, we can make an API endpoint to our systems to query and pull this data, easy peasy”. Then, surely, we can make Salesforce call that endpoint and Bob’s your Mother’s brother.

Well, the endpoint part was easy peasy. The APIs were designed and built within a sprint, and we created an AWS Lambda and API Gateway to allow Salesforce to access them.

We looked at Salesforce, we reached out to our contacts, and they suggested a few less than useful options to call this endpoint and access the data:

- Salesforce Connect – This may have worked, but when the Salesforce rep themselves tells you it comes with a hefty price tag, then it becomes something that’s not appealing, being that it would up our ongoing costs substantially

- Einstein – Again, another costly add on, again upping ongoing costs

- And then this solution:

a. Export from your database to a central repository a CSV file.

b. Use the command line in windows to schedule a daily job to upload using the downloadable data uploader tool. Or alternatively look at available solutions such as Workato, Zapier, Jitterbit to automate the process.

Salesforce Rep.

So. We had been given two very costly options, and one ridiculous method involving creating CSV files being exported from a system of ours and then using third party tools to pick these up. This was just unacceptable. So, we poked around Salesforce ourselves, and their documentation and discovered External Services, which from its description was tailor made for what we wanted:

Use External Services to connect to a service of your choice, invoke methods based on the external source via a flow, and import data from the service into Salesforce—all with the help of an easy-to-use wizard.

https://help.salesforce.com/articleView?id=external_services.htm&type=5

Why we were not suggested this as an option to begin with is baffling.

The Solution Chosen

With our AWS API Gateway endpoint ready, and External Services as our chosen way forward we marched into battle.

The way you setup an External Service is very clean. You provide a Swagger API definition for your endpoint. Salesforce then parses this and provides access to the inputs and outputs to your endpoint.

It’s at this point that I’ll spare you the months of back and forth with their “Premier” paid support trying to fault-find why Salesforce first wasn’t accepting our Swagger API definition, which was finally solved through the fantastic support given by a product manager who reached out after a few of us were having the same types of issue and were writing about it on the Salesforce forums.

So, here is a working Swagger definition for an endpoint that takes an array of objects that look like:

{"tenantKey": "fred", "contractStart": "2016-07-22", "billingPeriodStart": "2019-07-22"}And gives back an array of objects that look like:

{"tKey":"fred","cStart":"2016-07-22", "bStart": "2019-07-22", "usage": {"ATTRIBUTE1": 1234,"ATTRIBUTE2": 1334} }The Swagger definition ends up being (some things changed for the sake of company privacy):

{

"swagger": "2.0",

"info": {

"description": "Billing Data",

"version": "2020-02-19T21:59:23Z",

"title": "billingAPI"

},

"basePath": "/default",

"schemes": ["https"],

"paths": {

"/getBilling": {

"post": {

"consumes": ["application/json"],

"produces": ["application/json"],

"parameters": [

{

"in": "body",

"schema": {

"$ref": "#/definitions/MBInput"

}

}

],

"responses": {

"200": {

"description": "OK",

"schema": {

"$ref": "#/definitions/MBOutput"

}

},

"500": {

"description": "500 response"

}

}

}

}

},

"definitions": {

"BInput": {

"type": "object",

"properties": {

"tenantKey": {

"type": "string"

},

"contractStart": {

"type": "string"

},

"billingPeriodStart": {

"type": "string"

}

}

},

"BOutput": {

"type": "object",

"properties": {

"tKey": {

"type": "string"

},

"cStart": {

"type": "string"

},

"bStart": {

"type": "string"

},

"usage": {

"type": "object",

"properties": {

"ATTRIBUTE1": {

"type": "integer"

},

"ATTRIBUTE2": {

"type": "integer"

}

}

}

}

},

"MBInput": {

"type": "object",

"properties": {

"tenants": {

"type": "array",

"items": {

"type": "object",

"$ref": "#/definitions/BInput"

}

}

}

},

"MBOutput": {

"type": "object",

"properties": {

"tenants": {

"type": "array",

"items": {

"type": "object",

"$ref": "#/definitions/BOutput"

}

}

}

}

}

}

Important things to note

- This will only work with Enhanced External Services. So you have to have this enabled in your environment. The older version is very limited in the types of API definitions it can work with.

- The thing that Salesforce support never told us, stopped us being able to use External Services at all, and gave no useful error messages, and was only solved through the help of the Product Manager?

Salesforce has a 40 character limit on quite a lot of the data that is used in it. This includes ‘API Name’, which is the unique name given to anything in Salesforce that you reference from another part. When you import an External Service into Salesforce it assigns names to all the input and output variables based on a combination of your external service name, the function and what the objects within your Swagger definition are, and if those things strung together exceed 40 characters, it just won’t work. Won’t tell you that you’ve exceeded that limit anywhere, just will fail to work. And so that’s why the Swagger above has less than descriptive attributes like MBOutput, BOutput, tKey and cStart, because originally it had descriptive attribute names (as you usually should), but these combined together exceeded the limit.

- Don’t use underscores in any of your variables. Salesforce mangles them and renders those attributes unusable. (One of our ‘ATTRIBUTE1’, ‘ATTRIBUTE2’ attributes originally had one)

- Salesforce documentation will tell you there’s a right way, and a wrong way to define arrays in Swagger documents: https://help.salesforce.com/articleView?id=enhanced_external_services_considerations.htm&type=5

Unfortunately what they say doesn’t work. They say you need to define arrays like this:

{

"swagger": "2.0",

...

"schema": {

"type": "array",

"items": {

"$ref": "#definitions/MyObject"

}

}

...

"definitions": {

"MyObject": {

"type": "object",

"properties": {...}

}

}

}

So, the originally Swagger had this

"/getBilling": {

"post": {

"consumes": ["application/json"],

"produces": ["application/json"],

"parameters": [

{

"in": "body",

"schema": {

"type": "array",

"items": { "$ref": "#definitions/BInput" } }

}

],

....

And Salesforce accepted it, but then couldn’t access the incoming and outgoing data objects properly.

This is solved via doing it like I have in the full Swagger, by defining a single object as your input (or output), and then that object has an attribute which is an array of your objects.

Input:

{ "in": "body",

"schema": {

"$ref": "#/definitions/MBInput"

}

}

...

Definition:

"MBInput": {

"type": "object",

"properties": {

"tenants": {

"type": "array",

"items": {

"type": "object",

"$ref": "#/definitions/BInput"

}

}

}

}

Go with the Flow

Now we get to the next fun bit of how to use this External Service within Salesforce. That’s where Flows come into it.

Flows in Salesforce are pretty powerful, and allow automation of many different processes within Salesforce, however they are not as clear as they could be when you start trying to make them do what you want.

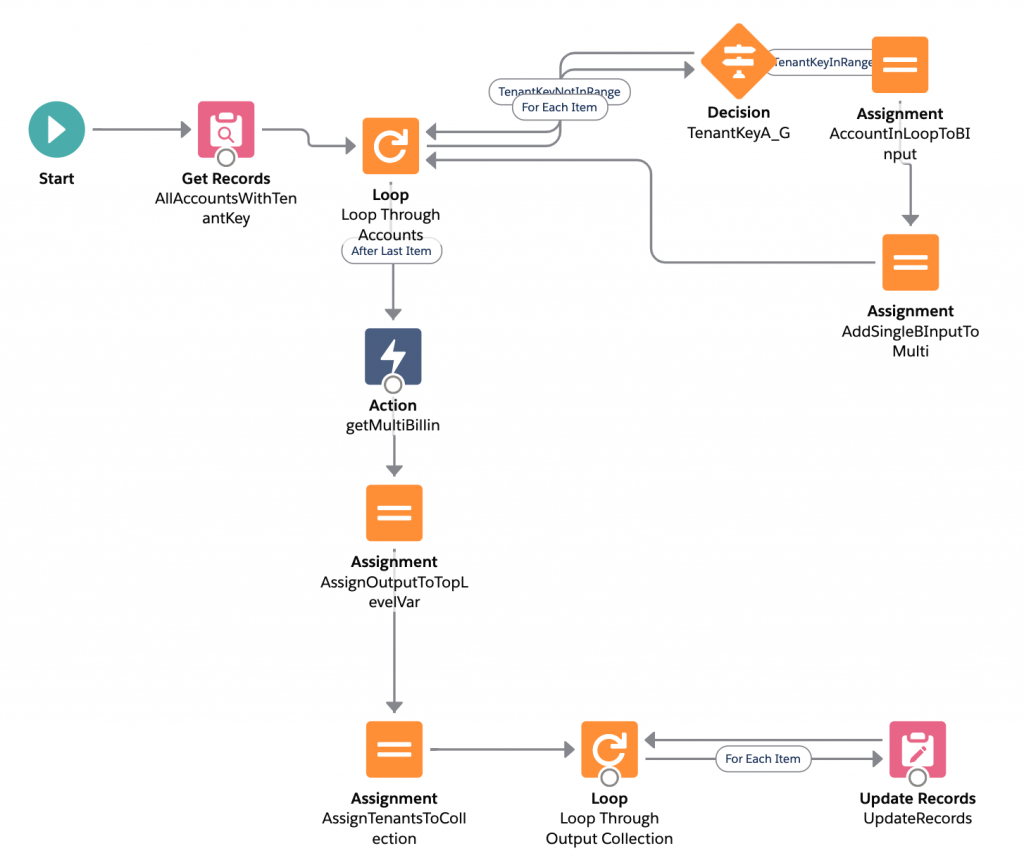

The Flow shown above is what I currently have to update all of our accounts with billing data from our API endpoint. The process is:

- Get all Accounts which have the field required to do the lookup on our API populated (Tenant Key)

- Loop through this collection, and for each item in the collection

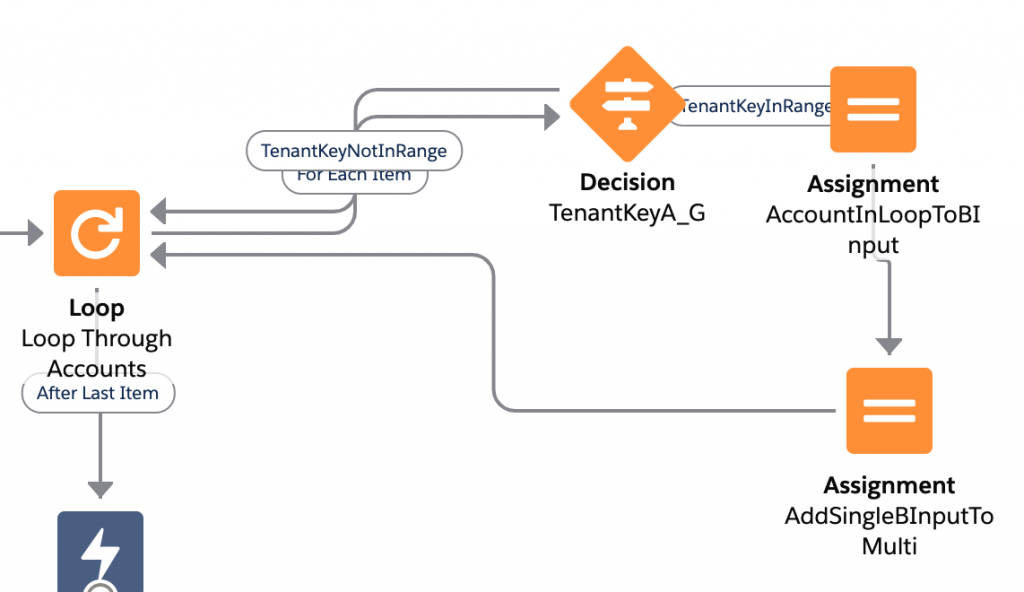

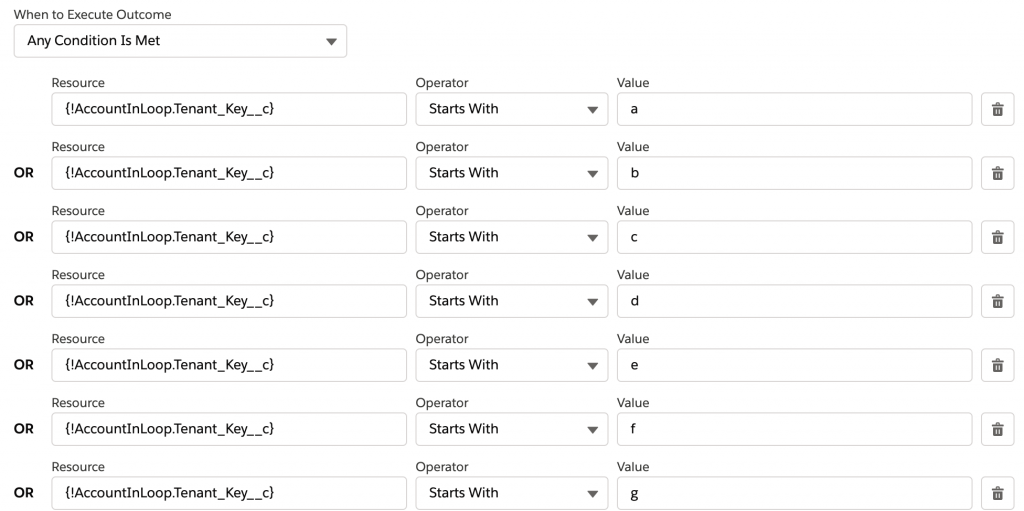

- ‘Decide’ whether the tenant key starts with one of the letters this flow runs against. (More on this later)

- Take the attributes from the account and put them into an instance of the type of object the API accepts (BInput from the Swagger)

- Add this BInput object to a collection of BInputs, to make a collection ready to send to the endpoint

- When all the accounts have been looped through, call the endpoint with the collection of BInputs (MBInput from the Swagger)

- When that returns, take the 200 response and assign it to the MBOutput variable as described in the Swagger

- Now loop through all returned values and for each item

- Match returned values to existing account by the Tenant Key, and then update the account’s billing values from what came back from the endpoint

Important things to note

- Sometimes Salesforce logic feels backwards. I spent some time lamenting that there was an ‘Is Null’ option, but no ‘Is not Null’, until I realised that you’re not using the operator to select a field that ‘Is Null’, but you’re using Is Null, and then say whether you want that to be true/false

- Close your loops! Originally I did not grasp the fact that you have to link whatever you’re doing within your loop back to the loop again, otherwise it won’t continue, it’ll just run once and then stop. This is probably obvious to most people, but didn’t dawn in me initially, I just assumed anything under the loop would run once per item. This also goes for any case a decision means you’re skipping an item, you need to link back to the loop again to continue on.

- Why the limiting the Flow to just Tenant Keys A-G here? Because Salesforce has Process Limits, and one of those is “Total number of SOQL queries issued 100“. And we have a good number more than 100 accounts that this process needs to update, and the original flow was getting to 101 accounts and then quitting out because it had hit this limit. Arbitrary? Yes. Annoying? Yes? Is this a good solution to this? Nope, but I’m not sure how better to solve this.

- Why do the limiting of Tenant Keys within a decision block? Why not just select only these accounts at the start of the flow? Well, because I couldn’t work out how to do an ‘OR’ there. The documentation says that if you add a number of conditions on the same field it will be considered an OR, where conditions on different fields will be considered ANDs. In my testing though, as soon as I tried this I ended up with no accounts at all selected. Decision blocks allow for OR conditions, and so I’ve done it this way.

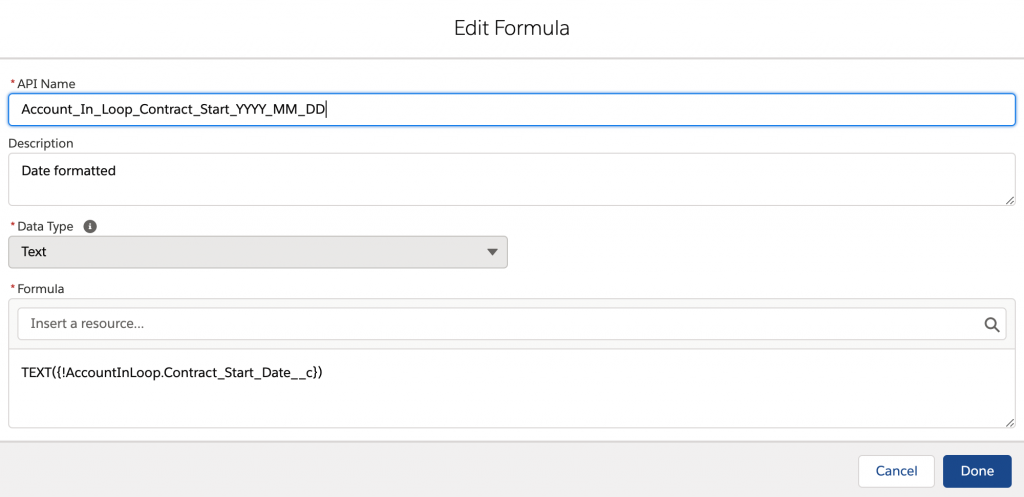

- Assignments and formatting can be not obvious too. We pass date fields, and our endpoint wants them in the form YYYY-MM-DD, and this is not how a date comes across if you straight map it in Salesforce. So, you have to create a formula first. The TEXT function provides the date format we wanted by default. (You can provide a formatting string to make it conform to what you may need as well). Ensure that your formula is against the correct resource for where you’re using it in your flow. Here it’s the first loop, and against the Account, so is working on the ‘AccountInLoop’ variable defined within the loop as the ‘Loop Variable’

What doesn’t work?

So, this got the Salesforce beast largely tamed. We can go and poke it, and it’ll go off and get the information we need and then update its values. Already a massive time saving, where it takes a minute or so to go to the three flows and run them.

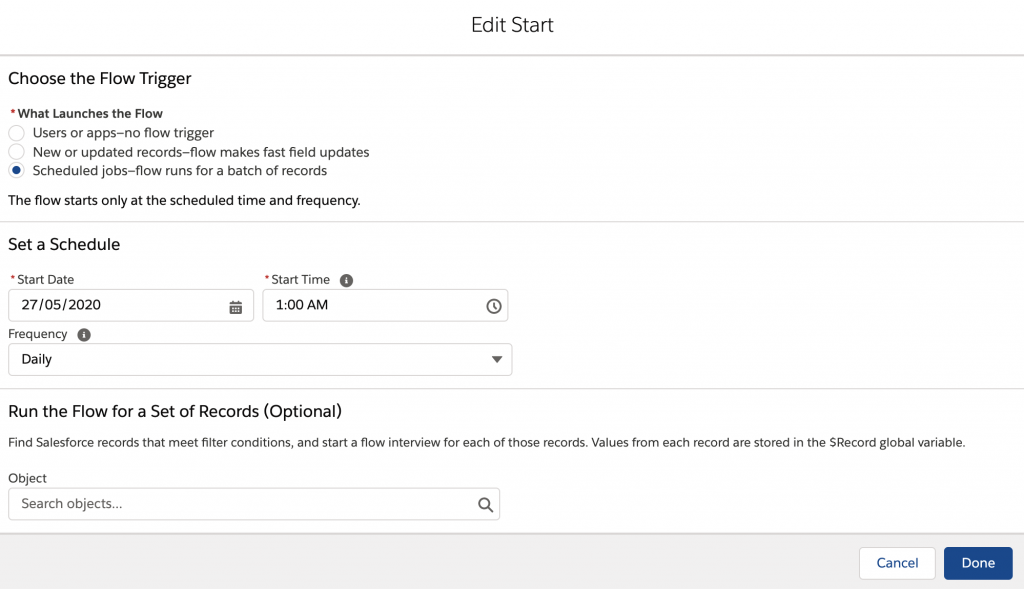

However, we want this to just automatically run every day. This should be simple. The start block on the Flow has the ability to do this:

Easy job. Done. Walk away happy.

Except, no. When I do this the flow does start at the given time, but does nothing. It says that it ran, but doesn’t actually trigger the flow to do anything, and gives no reason in logs, even with debug logging turned on. I’ve been told there needs to be a ‘Pause’ action in the flow to make it work, due to transactions. However, putting a pause in just makes it sit for a minute, and then still does nothing.

So, that’s our biggest thing, and is the most annoying because the functionality is supposed to be there, but isn’t.

It would be wonderful to not have to do this ridiculous splitting by tenant start letter. Having to break this into three flows purely as a work around to a pretty arbitrary limit is frustrating.

At the end of the flow we would like to update another value on our account using a formula which uses a value stored on an asset of the account, but we have no idea how to access the associated assets with an account within a formula. Doing this via another flow triggered by the first, or triggered by the fields being updated would be fine, but it’s very unclear how to get assets associated with accounts to work with.

Final words

This was my introduction to Salesforce, and it hasn’t been a pleasant one. It’s obviously a massive and capable tool (It has its own programming language for god’s sake!), but through a combination of things, something that should have taken a couple of weeks maximum has so far taken over 8 months of elapsed time, and it’s still not working as we’d like it. This would be via a number of issues with Salesforce as a product, and the team behind it:

- Having account managers who suggest either costly add ons, or horrendously manual processes to perform something that there is an in-built tool to do isn’t good enough

- When we ran into problems we asked for support, were told our current support wasn’t the high enough level to get answers, and so paid for the privilege of being able to get answers, only to have a support team that floundered around, takings days and weeks to respond or followup to queries, who escalated it multiple times, and still never found the issue. This took months. From using the Salesforce forums and discussing the issues with others having similar problems, a Product Manager from Salesforce reached out, and through direct email contact with her the problem was fixed in a couple of days. If you’re going to charge a premium for your support, that support better be good. And Salesforce ‘Premier’ support is not good. (See AWS for how to do this infinitely better)

- The initial problem was the 40 character limit issue. This could have been solved with no support interaction, and incredibly quickly, if the system alerted me to the problem. If there is a limit like this then ERROR as such, don’t fail silently.

- The documentation for External Services, and how to handle inputs/outputs with arrays is wrong, it doesn’t work, and caused me to waste even more time. It didn’t error when given a Swagger with arrays defined like the documentation says, but it also doesn’t expose those objects in app. If the documentation had told me to create those top level objects as I now have, that would have saved hours of frustration.

- The process of debugging scheduled flows is horrible. You cannot run a flow in debug mode when its scheduled, and so the only thing you can do is turn on a debug log, schedule it to run in the next 15 minute window, wait, go and find the log, discover it doesn’t tell you anything, and then scratch your head. It’s useless, and another case of Salesforce making things harder than they need to be through a complete lack of transparency as to what’s going wrong.

- The 100 record update limit is annoying. At least this one did end up logging out into a debug log, meaning I knew why it stopped. If there are going to be these arbitrary limits, give useful methods to perform processes that need to process over these.